Have you heard about Sora AI? Sora is OpenAI’s latest groundbreaking technology, which lets users create strikingly realistic videos from a simple text prompt.

Let’s learn more about Sora!


What is Sora?

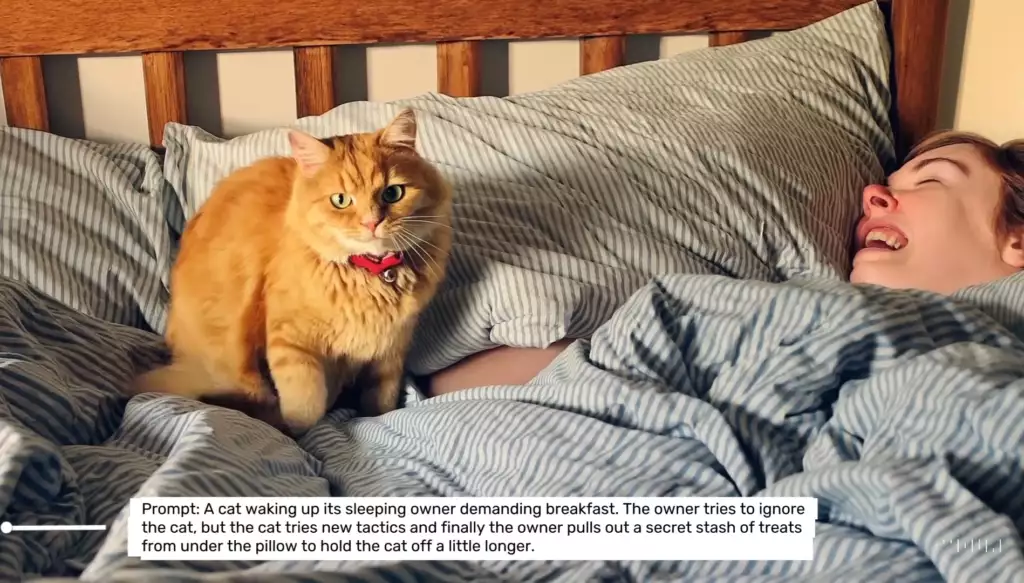

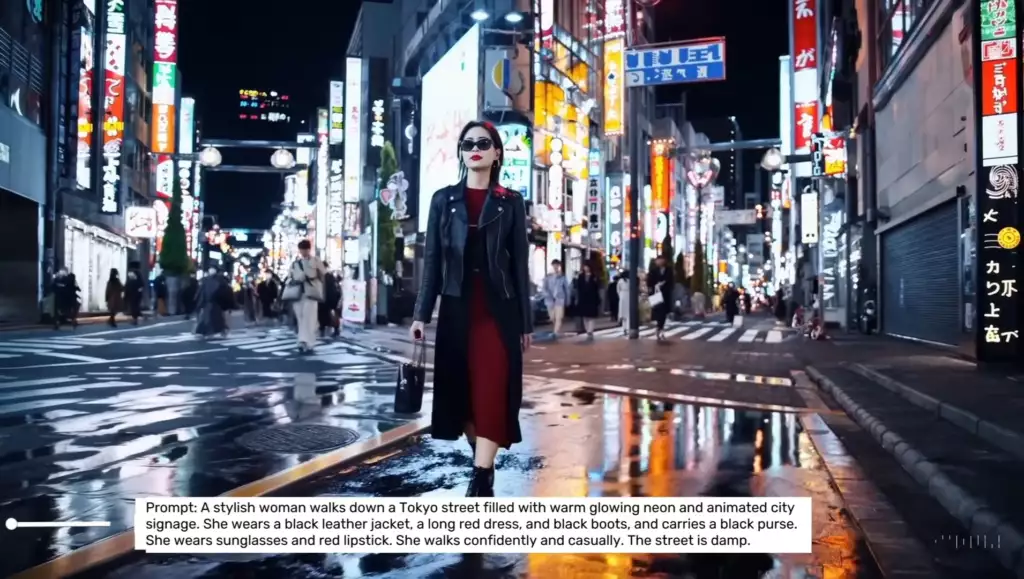

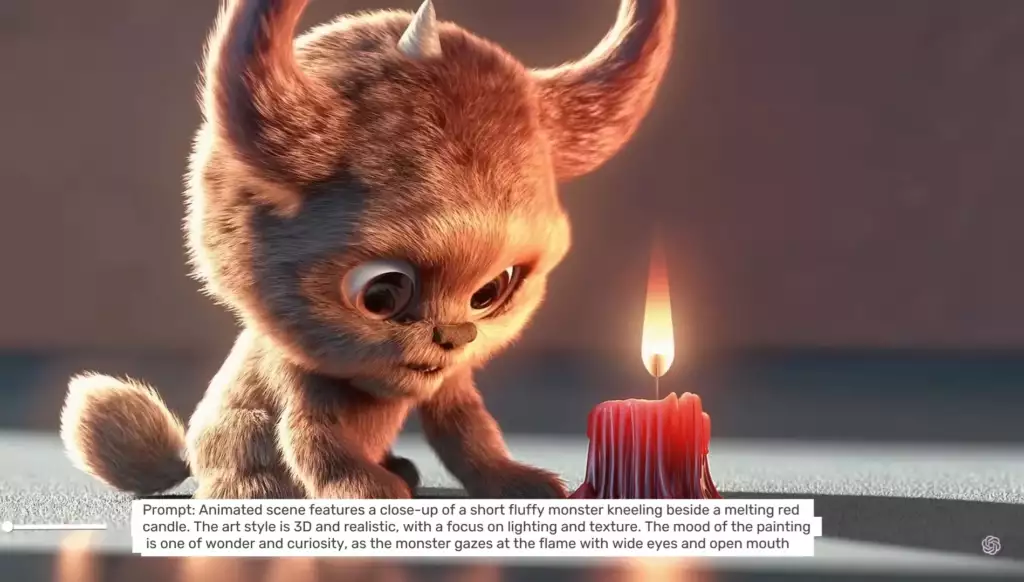

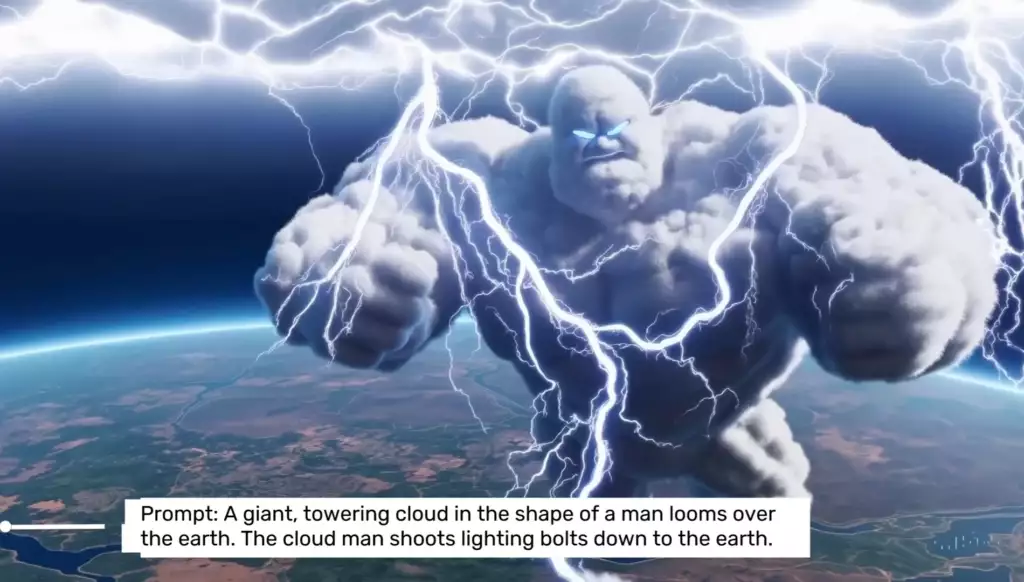

Sora is a text-to-video model that can generate videos up to one minute long while following the user’s prompt and maintaining visual quality.

It was developed by OpenAI, the company behind ChatGPT and DALL·E.

Sora can also extend a video by generating additional clips in sequence, and it produces lifelike footage with details such as falling snow particles, precise fluid movements, and reflections in mirrors.

With Sora, you simply enter a prompt like the examples above, and the model should return a video that matches it.

How does Sora work?

Sora works much like an AI image generator, just with many more steps. It uses a diffusion model, as do text-to-image models such as DALL·E 3, Stable Diffusion, and Midjourney.

Generation starts with every video frame filled with random noise; a trained model then gradually transforms these frames into something that resembles the description.

During training, the model takes real videos and progressively adds noise until they become pure static. It then learns to reverse that process, recovering a clear picture (or, in Sora’s case, a video) from the static.
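To make the noise-then-denoise idea concrete, here is a minimal, purely illustrative Python sketch of a generic diffusion process on a single “frame” represented as a short list of pixel values. It is not Sora’s implementation (OpenAI has not released Sora’s code). The linear noise schedule, the deterministic DDIM-style reverse update, and the `predict_clean` function are all assumptions chosen for illustration. In particular, `predict_clean` is a hypothetical stand-in for the trained neural network; here it simply returns a known target frame, so the example shows only the mechanics of each step, not the learning.

```python
import math
import random
from typing import Callable, List

Frame = List[float]  # a "frame" here is just a flat list of pixel intensities


def make_schedule(num_steps: int, beta_start: float = 1e-4, beta_end: float = 0.02) -> List[float]:
    """Linear noise schedule (an assumption); returns cumulative alpha-bar values for steps 1..T."""
    alpha_bars: List[float] = []
    running = 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / max(num_steps - 1, 1)
        running *= 1.0 - beta  # cumulative product; its square root scales the surviving signal
        alpha_bars.append(running)
    return alpha_bars


def add_noise(frame: Frame, alpha_bar: float, rng: random.Random) -> Frame:
    """Forward (training-time) process: blend the clean frame with Gaussian noise in one shot."""
    signal, noise_scale = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [signal * x + noise_scale * rng.gauss(0.0, 1.0) for x in frame]


def denoise(noisy: Frame, alpha_bars: List[float],
            predict_clean: Callable[[Frame, int], Frame]) -> Frame:
    """Reverse (generation-time) process: walk from near-pure noise back to a clean frame."""
    x = list(noisy)
    for t in range(len(alpha_bars) - 1, -1, -1):
        ab_t = alpha_bars[t]
        ab_prev = alpha_bars[t - 1] if t > 0 else 1.0  # alpha-bar is 1.0 at step 0 (no noise)
        x0_hat = predict_clean(x, t)  # the "model" guesses what the clean frame looks like
        # Recover the noise implied by that guess, then re-noise to the slightly cleaner level.
        eps_hat = [(xi - math.sqrt(ab_t) * ci) / math.sqrt(1.0 - ab_t) for xi, ci in zip(x, x0_hat)]
        x = [math.sqrt(ab_prev) * ci + math.sqrt(1.0 - ab_prev) * ei for ci, ei in zip(x0_hat, eps_hat)]
    return x


if __name__ == "__main__":
    rng = random.Random(0)
    target = [0.1, 0.5, 0.9, 0.3]  # hypothetical clean frame
    schedule = make_schedule(1000)
    static = add_noise(target, schedule[-1], rng)  # almost pure noise at the final step

    def oracle(frame: Frame, t: int) -> Frame:
        return list(target)  # hypothetical stand-in for a trained network

    print([round(v, 3) for v in denoise(static, schedule, oracle)])  # → [0.1, 0.5, 0.9, 0.3]
```

Because the stand-in “model” already knows the answer, the sketch recovers the target frame exactly; in a real system, `predict_clean` would be a large neural network conditioned on the text prompt, and its guesses would improve as the noise is removed.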

As part of its commitment to transparency, OpenAI has published a detailed explanation of how the model works on its website.


What are the strengths of Sora?

Sora’s strengths are evident in the videos OpenAI has released, which surpass anything we’ve seen before. To capture the user’s intent accurately, Sora uses a recaptioning technique similar to the one in DALL·E 3.

Before any video is generated, GPT rewrites the user’s prompt and adds more detail, automatically enriching the prompt.
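The recaptioning step can be pictured as a small pipeline: a language model expands the prompt, and only the expanded text reaches the video generator. The Python sketch below is hypothetical. `recaption`, `generate_video`, and the instruction wording are illustrative names, not OpenAI’s API, and the language model and video model are passed in as plain functions, so any client (or a stub, as in the usage example) could be plugged in.

```python
from typing import Callable

# Hypothetical instruction text; OpenAI has not published the exact wording it uses.
RECAPTION_INSTRUCTION = (
    "Rewrite the following video request as a detailed description. "
    "Keep the user's intent, and add specifics about subjects, setting, "
    "lighting, camera movement, and style.\n\nRequest: {prompt}"
)


def recaption(user_prompt: str, llm: Callable[[str], str]) -> str:
    """Ask a language model (any text-in, text-out function) to enrich a short prompt."""
    detailed = llm(RECAPTION_INSTRUCTION.format(prompt=user_prompt.strip()))
    # Fall back to the original prompt if the model returns only whitespace.
    return detailed.strip() or user_prompt


def generate_video(user_prompt: str, llm: Callable[[str], str],
                   video_model: Callable[[str], bytes]) -> bytes:
    """Hypothetical pipeline: the video model only ever sees the recaptioned prompt."""
    return video_model(recaption(user_prompt, llm))


if __name__ == "__main__":
    def fake_llm(text: str) -> str:
        # Stub standing in for a real language model call.
        return ("A golden retriever puppy bounds across a snowy meadow at sunrise, "
                "filmed with a slow tracking shot.")

    print(recaption("dog in snow", fake_llm))
```

Passing the models in as functions keeps the sketch independent of any particular SDK and makes the augmentation step easy to test on its own.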

Sora can create complex scenes with multiple characters, distinct types of motion, and precise details in both the subject and the background. According to OpenAI, the model understands not only what the prompt asks for but also how those elements exist in the real world.

Its videos show accurate lighting, reflections, and natural-looking human features. It can also produce multiple shots within a single generated video while faithfully preserving the characters and visual style.

What are the limitations of Sora?

Despite its impressive capabilities, the current version of Sora has several limitations that OpenAI itself acknowledges. Sora struggles to simulate physics accurately, so real-world physical rules are not always followed.

For example, in a video of an exploding basketball hoop, the net appears to restore itself after the explosion.

Model Weakness: An example of inaccurate physical modeling and unnatural object “morphing.”

In addition, objects’ spatial positions may shift unnaturally. Objects such as animals can appear suddenly, and they sometimes overlap one another.

Body parts may randomly disappear and reappear, people may emerge out of nowhere, and feet may sink into the ground.

What are the risks of Sora?

Like text-to-image models, Sora carries certain risks, including the potential to generate misinformation and harmful content, such as inappropriate videos with graphic or violent material.

Content such as deepfake videos could sway public opinion. Furthermore, biased training data can lead to unfair representations in the generated videos.

How can I access Sora?

Sora is not yet available to most users, as OpenAI is taking a cautious approach to releasing it. First, a small group of testers known as “red teamers” will probe the tool for serious risks and potential harms.

Next, a select group of designers, filmmakers, and visual artists will get access so that OpenAI can gather feedback on how well the tool works for creative professionals.

Although no public release date has been set, Sora will probably become available to the general public sometime in 2024.

Given its capabilities, however, we expect it to be offered through OpenAI’s paid plans rather than for free.

How could Sora AI transform our lives?

Social Media

Sora could be used to make short videos for social media platforms such as YouTube Shorts, Instagram Reels, and TikTok. It is especially well suited to content that would be difficult or even impossible to film.

Marketing and Advertising

Producing advertisements, promotional videos, and product demos has traditionally been both difficult and expensive. Text-to-video tools such as Sora could significantly reduce these costs and improve overall efficiency.

Concept Visualization and Prototyping

Even when AI-generated video is not the final product, it can help illustrate concepts effectively. Filmmakers can use it to mock up scenes before shooting, and designers can generate videos of products before they are built.

Synthetic Data Generation

Synthetic data generation is the process of creating new data, either manually or automatically through computer simulations or algorithms, as a substitute for real-world data.

Synthetic data is often used when privacy or feasibility concerns prevent the use of real data. Where access to a real dataset must be strictly controlled, Sora could help produce artificial data with similar characteristics that can be made publicly available.

According to OpenAI, the quality of generated video is also expected to improve significantly.

Conclusion

To sum up, Sora represents a significant development in artificial intelligence, and both its public release and its potential uses are eagerly awaited.

Experience the new era of property marketing efficiency with Sora, revolutionizing the industry. Let’s unlock new opportunities with IQI Global, a leading proptech company.